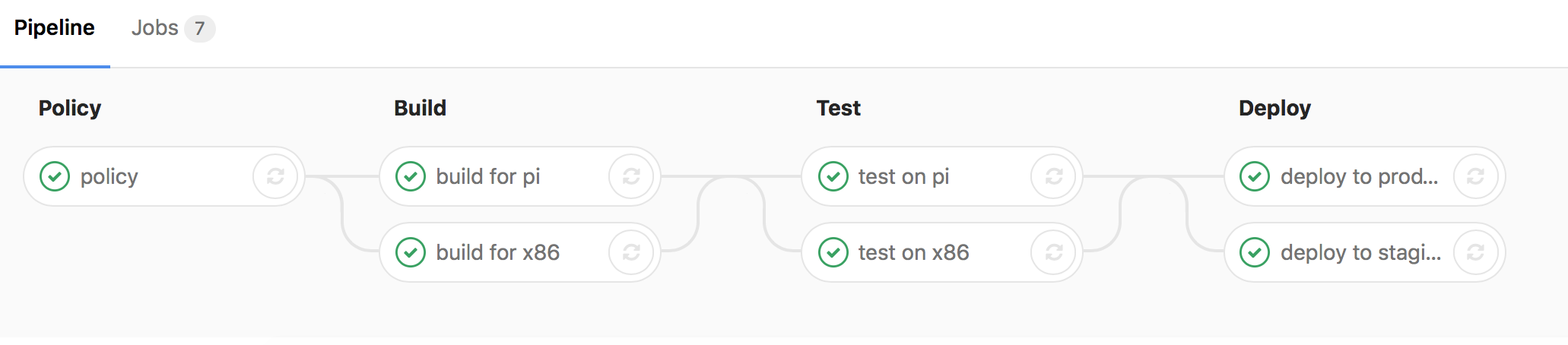

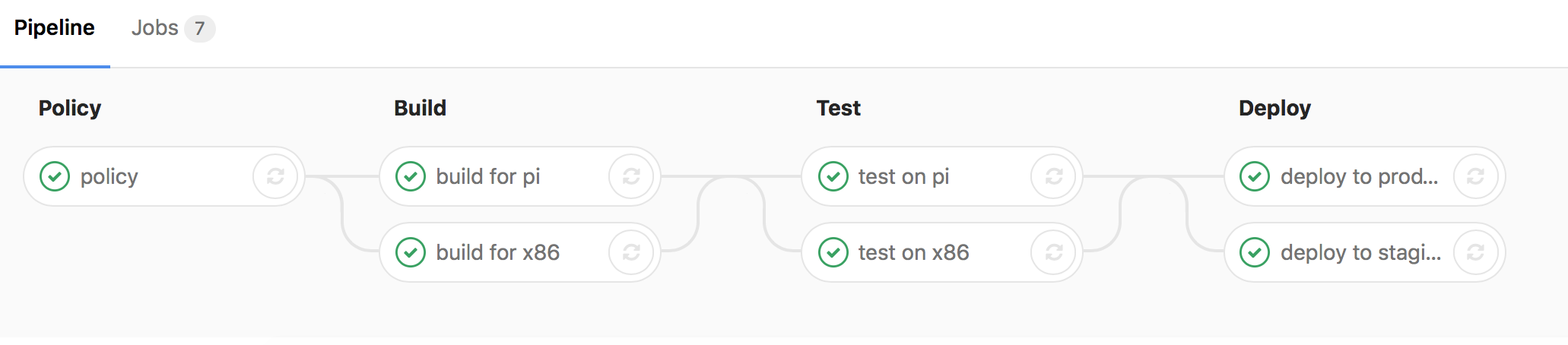

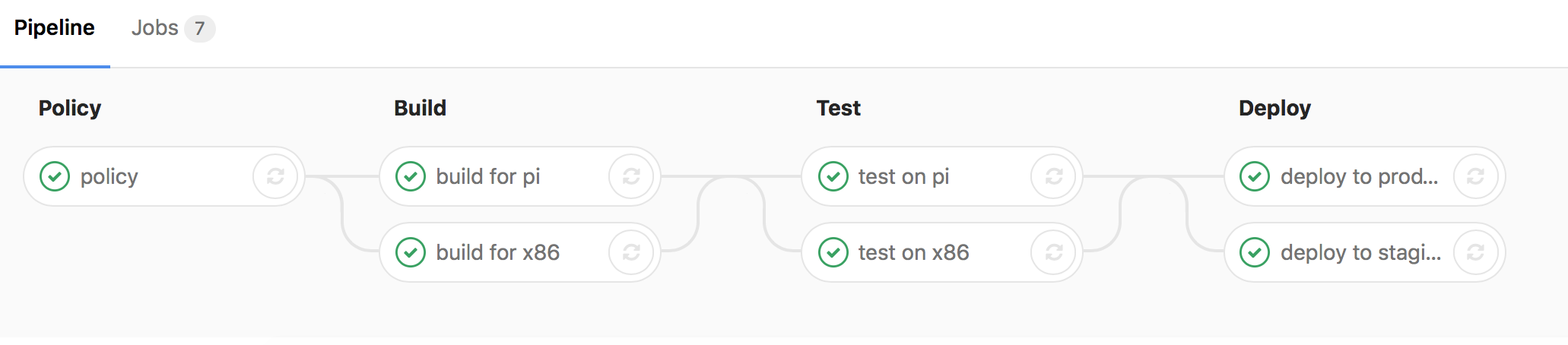

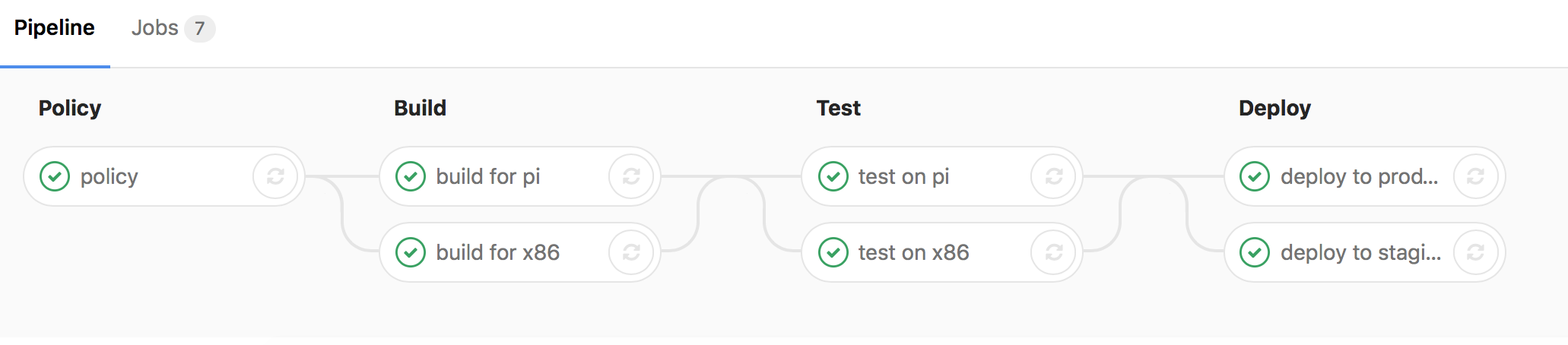

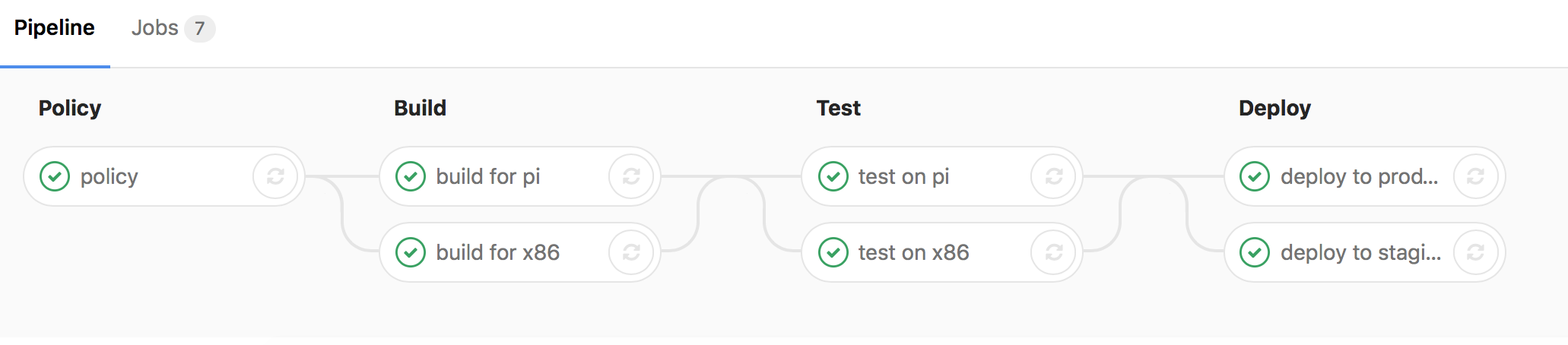

name: inverse
class: center, middle, inverse
layout: true

.header[.floatleft[.teal[Christopher Biggs] — DevOps for Dishwashers].floatright[.teal[@unixbigot] .logo[@accelerando_au]]]

.footer[.floatleft[.hashtag[LastConf] Sep 2017]]

---
name: callout
class: center, middle, italic
layout: true

.header[.floatleft[.teal[Christopher Biggs] — DevOps for Dishwashers].floatright[.teal[@unixbigot] .logo[@accelerando_au]]]

.footer[.floatleft[.hashtag[LastConf] Sep 2017]]

---
layout: true

.header[.floatleft[.teal[Christopher Biggs] — DevOps for Dishwashers].floatright[.teal[@unixbigot] .logo[@accelerando_au]]]

.footer[.floatleft[.hashtag[LastConf] Sep 2017]]

---
template: inverse

# DevOps for Dishwashers

## Bringing grown-up practices to the Internet of Things

.bottom.right[
Christopher Biggs, .logo[Accelerando Consulting]
<br>@unixbigot .logo[@accelerando_au]
]

---
class: bulletsh4

# Who am I?

## Christopher Biggs — .teal[@unixbigot] — .logo[@accelerando_au]

.leftish[
#### Brisbane, Australia
#### Former developer, architect, development manager
#### Founder, .logo[Accelerando Consulting]
#### Full service consultancy - chips to cloud
#### ***IoT, DevOps, Big Data***
]

???

G'day folks, I'm Christopher Biggs. I've been involved off and on with embedded systems since my first professional role over 20 years ago, and nowadays I run a consultancy specialising in the Internet of Things, which is the new name for embedded systems that don't work properly. We work with companies developing IoT devices to help them choose the right technologies and practices to build, test and deploy their products.

Now I've spoken in the past about the state of security in IoT, and while I agree that it's pretty awful, I'm not pessimistic. I made some predictions about how bad things could get, and what kinds of action would be needed, and the bad and good news is that these are coming true. I'm seeing the space rapidly mature and all the right things are being done to address the challenges.
---
layout: true
template: callout

.crumb[
# Agenda
## Problems
]

---
# Why DevOps?

???

Earlier this year I talked about the Internet of Scary Things, including here in Brisbane at the Internet of Things Meetup. That presentation is about how IoT is like the Wild West right now, and why DevOps is a must-do for IoT.

Traditionally embedded systems have been developed carefully and conservatively, and had long lifecycles. In my experience you need those long lifecycles to cope with the generally awful tooling in the embedded space, which is also apparently traditional. These days, the Internet of Things brings the contradictory requirement of products that are set in stone, sometimes quite literally, but which connect to the wild Internet where sometimes a day can be an age.

So *this* talk is going to be about the *how*. How *you* can apply best practices from grown-up computers to the challenges of building and managing internet-connected embedded systems.

---
# Why Dishwashers?

???

I've called this talk DevOps for Dishwashers because recently the tale of an internet-connected dishwasher made the news. A lot of people laughed, because why would a dishwasher need to be on the internet?

It took about 10 years for us to go from personal computers being networked only at need, to us considering any computer that isn't networked all the time to be little more than a warm rock. And I expect the same for every other electric appliance. In the long term, I think the very word computer will become archaic, because everything will be a computer.

---
# *"Software is eating the world"*

## .right[-- Marc Andreessen]

### Wall St Journal, Six years ago last month.

???

Software, as Marc Andreessen predicted, really truly will have eaten the world. This will have a lot of effects, but the one I want to focus on today is that we must rethink our concept of quality in the embedded space.
The price of an amazing future where everything can interact with everything else is that everything might interact with everything else.

---
layout: true
template: callout

.crumb[
# Agenda
## Problems
## DevOps?
]

---
template: inverse

# Interlude: What do I mean by "DevOps"?

???

Actually, before I go too far, I should define what I mean by DevOps. This is one of those terms that has been misused so much that it's now dangerous to use it without confirming that the listener hears what you mean.

---
.fig40[ ]

.spacedown[
## DevOps is not a ***thing you do***,
## it's the ***way you do things***.
]

???

DevOps is not a thing you do. DevOps engineer is not a job title. There's no such thing as a DevOps team. It's an easy mistake to make; in fact I once held the job title Head of DevOps.

What DevOps **is**, is accepting that development and operations are part of a spectrum, and that drawing a sharp line between them is counterproductive.

---
.fig40[ ]

.spacedown[
## *Empower* ***everyone***
## *to maximise* ***value.***
]

???

My definition of DevOps is: Evolving a culture and a toolset that empowers everyone to effectively maximise value through radical transparency and extreme agility.

---
layout: true
template: callout

.crumb[
# Agenda
## Problems
## DevOps?
## Solutions
]

---
template: inverse

# *"When every Thing is connected, **Everything** is connected"*

## .right[-- Me]

???

So today I'm going to look at how we pay the price of a connected future. The body of this presentation is about the practical things you can do to build quality products for the Internet of Things. I'll look at the lifecycle of an IoT product from inception to retirement, and what you can be doing to get the best outcomes at every stage.
The three areas I'm going to look at are firstly choosing platforms that support rather than hinder quality outcomes, secondly shaping your development and quality practices to foster agility while preserving reliability, and thirdly working with your data in ways that promote interoperability and reusability.

---
layout: true
template: callout

.crumb[
# Agenda
# Landscape
]

---
# Welcome to the Internet of Things

## pop. 10 Trillion

???

In just three score years and ten, the span of a single human lifetime, we saw the ratio of computers to people increase by around one order of magnitude per decade. We went from one per country, through one each for the Fortune 500, one for every business, one for every person, to the present day with considerably more than one per person in the industrialised world.

In the next decade I expect another tenfold increase, and at some point after that the question will become meaningless. Everything will be a computer. Every. Thing. Hence, the Internet of Things, a world where humans are a trace impurity in a living network of machines.

---
layout: true
template: callout

.crumb[
# Agenda
# Landscape
# Challenges
]

---
.fig30[ ]

.spacedown[
# Solve the next problem, not the last one]

???

Back about a century ago, one pundit predicted that civilisation was about to grind to a halt, because trend analysis showed that very soon all available single women would be employed as telephone exchange operators, and thus no further rollout of the telephone network would be possible.

If you wonder how we're going to curate and maintain a thousand devices per person, you're making the same kind of extrapolation error as our telephone operator pundit.

---
# Strategy is adapting your behaviour to circumstances.

???

When a computer cost 3 months' wages, you shepherded it carefully. When computers cost less than a cup of coffee, they become consumables, bought and sold by the bushel. They're sheep, not sheepdogs.
---
class: bulletsh4

.crumb[
# Landscape
# Challenges
## Risks
]

# Everything is awful

.bottom.right[
Do you want to know **more**?<br>
"The Internet of Scary Things" [christopher.biggs.id.au/talk](http://christopher.biggs.id.au/talk)]

???

I could go on for hours about the security landscape in the Internet of Things, and in fact I've spoken on the subject in the past. The one-slide precis is that bad people want to either steal your stuff, or break your stuff. It doesn't have to be many bad people; another side effect of everything being connected is that if you are a terrible person, you have the opportunity to be terrible to vastly more victims.

---
class: bulletsh4

.crumb[
# Landscape
# Challenges
## Risks
]

.fig30[ ]

.spacedown6[
# Everything is awful
## and the awful is on fire]

```
curl http://my-dishwasher/../../../../../../../../../../../../etc/shadow
```

???

I don't want to go off on a tangent about security in particular, but I find that the underlying causes of security problems in IoT are an instructive microcosm of the wider challenges.

Last October, the Mirai worm spread around the globe because of well-known default passwords that never get changed. In April we got the endlessly entertaining story of the compromised internet dishwasher, which demonstrates how many developers aren't trained well enough to avoid common and completely avoidable pitfalls. The downside of ubiquitous communications is that there are no safe spaces.

May's WannaCry malware outbreak showed us that when everything is software, everything has to be maintainable. June's second coming, in the form of its much more damaging successor, hammered the point home. That particular worm shut down a global courier for over a month, cost a container shipping company something over three hundred million dollars, and, wake up people, it threatened the chocolate supply.

If you can't patch it, it's no longer safe to own it.
---
.crumb[
# Landscape
# Challenges
## Risks
## Desiderata
]

.fig50[  ]

.spacedown[
# Desiderata]

???

So let's summarise what I think a response to all these faceplants should look like, then we'll go into detail on each point.

---
# Select appropriate tools and platforms

???

First, choose the right platform and tool.

---
# Comprehensive identity management

???

Next, make your identity and security part of your framework so that you only build it once.

---
# Automate for developer and user convenience

???

Automate everything you possibly can.

---
# Testing and testability kept front-of-mind

???

Design for testability.

---
# Train, and Audit, and keep doing both

???

Train your teams. Share skills, watch YouTube videos, get consultants, go to conferences. Whatever your budget there's a way to improve.

---
# Monitor and react (automatically)

???

And lastly maintain awareness, and use that awareness to respond rapidly.

---
layout: true
template: callout

.crumb[
# Agenda
# Landscape
# Challenges
# Solutions
## Platforms
]

---
template: inverse

# Platforms

???

So let's talk about platforms. Jez Humble, who literally wrote the book on Continuous Delivery, gave a keynote at Agile Australia in Sydney this June where he told a story about HP's printer teams. Their cost of engineering was off the chart, yet quality was awful, and part of the reason was they were spending over a quarter of their time porting their libraries to new platforms over and over, because pretty much every printer model used a different processor. They moved to a common architecture and massively reduced their reengineering cost. They could spend the recovered time on innovation and quality.

---
# People are more expensive than circuits

## .right[(Sorry, robots)]

???

My point here is that every hardware business has a person whose job is to look at every component and ask "can we delete that to save half a cent per unit". This is a person who's empowered to remove customer value.
The correct question when designing your hardware is: will this hardware platform add value, in terms of resilience, longevity and enabling quality?

---
# Hardware is DevOps too

## .right[(Robots, I hope this makes it up to you :)]

???

That's right, your platform is part of your DevOps culture. Your goal should be to select a platform that supports your DevOps way of doing things.

---
# Open and well supported

???

This means that openness and friendliness are the first things you should look for. So, select a processor and platform that has good development tools and will likely be around for a long time.

---
# Case study: .blue[**ARM v7**] and .red[**Debian Linux**]

???

Thanks to the demand from Android phones and TV media boxes, there's a huge variety of ARM systems out there which are similar enough that hardware differences aren't really important. You can develop to the one architecture and select an appropriate board from five bucks up.

---
.fig40[ ]

.spacedown[
# Meet the #3 top-selling computer of all time
]

???

In a number of my projects we work with Raspberry Pi as the development platform. This brings the convenience of a huge selection of platform tools and a great developer community. We can use these in the lab, and for CI workers, and even for the first hundred or so product units. When the time comes to scale up, there's a wide selection of other ARM designs to buy or build. Here's one that starts at seven bucks.

---
# Artisanal free-range small-batch Linux?

## No.

???

The same goes in software. Think about what happens next time a zero-day exploit goes epidemic on the Internet. The top tier vendors probably have a patch out in a day or two. If you picked some hipster Linux distribution that has thirty users and one maintainer, you may never see a patch.

---
# Without the Internet, it's just a Thing.

???

I also think it's important for devices to be online as much as possible.
Power and network constraints may mean that devices can't afford to be online continuously, and this is OK, but you do need to think about how to support DevOps processes within these constraints.

---
# *"Is there anybody out there?"*

.right[-- Pink Floyd<br>(also, my lighting controller)]

???

Plan to have two-way communication with your devices. Forget about one-way communication channels; they may be cheaper but you'll regret it.

---
# *"Put the robot back in the ocean, kid."*

.right[-- Oceanographers, everywhere]

???

Make sure you have a way to identify and locate every device, even if you never think you need it. I'm talking about putting some kind of display on a device, even if it's only a single LED, and ensuring you keep track of device location. When you have ten thousand sensors and one is broken, you don't want to drive around trying to make out the serial number on a faded sticker.

Devices do go rogue. I've heard more than one story from the world of oceanography where probes go missing because people "rescued" them, or sold them to scrapyards.

---
# Management is not a dirty word

???

The best way to do all this is to adopt a remote management platform. At the least you need a secure way to upgrade and shut down a device. I'm recommending using an agent-oriented datacentre automation tool like SaltStack; I'll give some examples of what I've done with this tool later on.

The right tool will kill two birds with one stone: you can use it both to construct your master configurations in the lab, and also to deploy updates in the field.

---
layout: true
template: callout

.crumb[
# Landscape
# Challenges
# Solutions
## Platforms
## Dev
]

---
template: inverse

# Development

???

All right, so we chose some hardware and an operating system, and installed at least one blinky light. Now we have to make it blink.

---
# Nice languages are portable, memory-safe and asynchronous.

???

I want you to take a hard look at what programming platforms you use.
Programming has entered an era where the ability to be rapidly up and running thanks to an ecosystem of open source parts is revolutionising the craft. One of the most important things to look for is a healthy ecosystem of actively maintained components. JavaScript and Go are particularly notable for this in that they have component search engines that help you understand who else is using a component.

---
# Case study: .green[JavaScript]

???

JavaScript rules the world. It's not the language anyone would have chosen to be number one but it's there, and it's getting better every year. You can literally run JavaScript from chips to cloud, on tiny two-dollar microcontrollers, to cloud services. It's the only language that has common support across Azure, AWS and Google Cloud.

---
.fig40[ ]

.spacedown[
# Case Study - .blue[Go]]

???

I'm using Go for my largest project. This is a great fit for container environments because your executables are self-contained. There's built-in cross-compilation support, so you can work on Windows, Mac or Linux, wherever you're most productive. My CI pipeline can run on stock cloud systems, and spit out ARM native containers.

---
# Naughty languages are like a tightrope over a pit of spikes.

???

I coded in C and suchlike for a very long time, and I just don't think there's a good case for widespread use of these tools any more. It's simply far too easy to write brittle or insecure software. Modern languages have learned from these drawbacks and provide a really effective safety net.

---
# *'The wifi password is "abc123';cat /etc/passwd#" '*

## Say no to shell scripts.

???

In particular, avoid the temptation to glue things together with shell scripts. It's so tempting, but when you're dealing with malicious user input the attention to detail needed to be secure takes all the convenience out of it.

---
# Use, and reuse, a framework.

## Yours, mine, Google, Amazon, Microsoft, Apple, whatever.

???
Frameworks are important for IoT because there are a lot of foundation behaviours in common between devices. Given the time and budget pressure on low cost devices, you don't want to waste resources on addressing the same problems over and over, or worse still not address them at all.

---
# AWS IoT

???

I'm using Amazon's ecosystem in a number of projects. Major challenges like security, intermittent connections and data collection are solved for you. When scale becomes an issue, you have Amazon Greengrass, which lets you move from a centralised system where everything talks to the cloud, to a more decentralised architecture, where you have a building-level or floor-level element which is under the control of your AWS infrastructure.

---
class: bulletsh4, tight

# Choose your own framework adventure

.leftish[
#### Amazon IoT and Greengrass
#### Google IoT
#### Azure IoT
#### Open Connectivity Foundation IoTivity
#### Resin.io
#### Mongoose-OS
]

???

If you look at the awful usability of many home automation products, you'll see that vendors have implemented basic behaviours badly when they could have gotten that work done for them by adopting a framework.

These are some frameworks I like. The great news for us is there are a lot of vendors going hard at this problem.

There is a learning curve. You're initially going to tell yourself "I don't need all this complexity, can I just replicate the couple of parts I need", but particularly in the maintenance part of the lifecycle, a good framework will repay your investment.

---
# Containment is complexity management

???

I've gone on record as saying that you shouldn't just screw a Linux server to the wall, nor ship software that you don't need. So here I am advocating using a common mainstream hardware and software platform, which breaks both those rules. Containment is how I reconcile those constraints.
Software containment causes me to limit the interactions between my parts, which helps with security and complexity concerns, and the consequent loose coupling between parts lets me reuse components more effectively.

---
# You *can* run Docker on a $6.95 Linux computer

???

Docker is the containment engine that everyone knows, but of course there are others. The cloud vendors are all on board with Docker, so this means I can use Amazon's or Google's or Microsoft's container registry services.

---
# Use your CI to produce Docker images as artifacts

???

The way I work with containers is to have my build system construct and upload containers. This means that reviewers and testers are working with the exact shippable artifact. Your orchestration tools can deliver updates to devices as container updates, and thanks to Docker's image layering, this generally turns out to be quite efficient.

---
layout: true
template: callout

.crumb[
# Landscape
# Challenges
# Solutions
## Platforms
## Dev
## QA
]

---
template: inverse

# Testing

???

Testing is probably the single most critical point for DevOps. The goal we are striving for is more frequent releases, and in order to meet that goal you *have* to reduce the human burden of testing.

---
# Total Infrastructure Awareness

## replicate your whole ecosystem

???

The zeroth law here is to actually do testing. To structure your enterprise in a way that provides fast and easy access to test environments. Ideally anyone who wants to test can click a button and have a fresh environment in minutes.

---
# No snowflake servers.

## A dev team should have access to disposable instances of everything

???

If it takes days of work to provision a test environment, nobody will ever do it, which means some testing will never get done, or your test system will be out of sync with production.

---
# DevOps is a disaster, every day

## and that's good

???
Worse still, if you're not deploying new environments every day you might reach that moment where you need to do it in a hurry and it turns out nobody knows how. I've seen it happen.

---
# Painful testing practices beget painfully bad testing

## provide easy test data

???

Making it painful to test will result in painfully bad testing. With IoT you sometimes have expensive or complicated sensors that are hard to work with in the lab, so you must ensure that each component in your system has a mock, and can generate test data.

---
# Quick fixes are **good**

## and cheap

???

Actual research data shows that the sooner you find a bug, the less it costs to fix. If a developer never commits a bug, it costs nothing to fix, so this is the first place that a good toolset pays off.

---
# Listen to that annoying hipster tech blogger

## At least four eyeballs per line.

???

If you find the problem during coding, that's where pair programming and code review pay off.

---
# Test-before-merge

## Never* "break the build"

.footnote[well, almost never]

???

If automated testing tools find a problem, then at least you haven't wasted anyone else's time.

---
# Fail fast

## Unit tests first, followed by slower end-to-end tests

???

Now you can tell developers, run the tests every time before you push, but in my experience pixies will fly out of my ear before that happens. So whatever central CI you have needs to fail cheap. Do the fast tests up front, and the slower tests later.

---
# Every pair of eyeballs costs $$$

## (and no, you can't save $-½ by poking one out)

???

Once a change merges into your code repository the costs multiply. You've consumed the time of the person who found it, and then the developer who fixes it, and then the person who confirms it's fixed. If you make it to integration or release testing then you have to go back and redo work and redo more testing, and possibly reschedule your release.
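---
# Fail fast, sketched

The "unit tests first, slower tests later" ordering could be expressed in a GitLab CI file roughly like this. It is a sketch only: the stage names, job names and `make` targets are assumptions made for illustration, not taken from the pipelines described in this talk.

.smallcode[
```yaml
# Sketch only: stage names, job names and make targets are hypothetical.
stages:
  - unit        # fast tests run first
  - e2e         # slower tests start only if the unit stage passed

unit tests:
  stage: unit
  script:
    - make unit-test      # hypothetical target

end to end tests:
  stage: e2e
  script:
    - make e2e-test       # hypothetical target
```
]

???

GitLab runs stages in the order listed and, by default, does not start a later stage when a job in an earlier stage fails. A broken unit test therefore costs seconds of CI time rather than a full end-to-end run.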
---
# Do not poke customers with sticks (either)

.bottom.right[
(Wherein Christopher does math to "prove" a point)]

???

And if a problem makes it into the hands of customers we're talking about hundreds of times more cost to fix than if you'd found that problem on day one.

It's true that comprehensive automated testing does take time and money. One study shows it costs around thirty percent more. But the same study shows a ninety percent reduction in defects, and so if your automated tests catch only one bug a week then your testing effort more than pays for itself.

---
# A modern embedded system is faster than a Cray 1

## But that's still **reaaally slow**

???

Now when you're working in IoT you're probably not running an x86 CPU, so how do you compile and test for various embedded CPUs in your Continuous Integration rig?

---
# Cross-platform CI pipelines

## Option zero: cross-platform languages are win

???

Well, good language choice and containers to the rescue here. If you're working in Go or NodeJS you will find that you can run your code on any platform, and build your distribution artifacts on any cloud system.

---
# Cross-platform CI pipelines

## Option one: Emulate

???

Emulators have gotten really good, too. You can spin up an ARM emulator on a cloud system and boot it up just like a virtual machine. It's remarkably easy.

Just a couple of weeks ago Amazon announced that their EC2 Systems Manager now works with ARM computers like Raspberry Pi. So you can build a cluster of Pis and manage them as if they were EC2 instances.

---
# Cross-platform CI pipelines

## Option two: Enrol embedded systems in your CI

???

Of course, you do want *some* real devices on your testing menu. Working with ARM and Linux means that your systems can integrate right into whatever CI you're using. I'm using GitLab as it was one of the first systems to build the automated testing tools right into the code repository, but now GitHub and Bitbucket have all that too.
So go with whatever you know.

---
# Case Study: My Pipelines

.fig100[ ]

.bottom.right[Minimal requirement: one x86 and one ARM server (eg Raspberry Pi)]

???

The way I set up my CI is to set a special tag on the jobs that MUST run on ARM, then configure my ARM workers to only run those tagged jobs.

In this pipeline there is only one ARM job, which is handled by a pool of ARM systems on premises; the rest run on standard cloud systems.

---
# Case Study: My Pipelines

.fig100[ ]

.bottom.right[Stage 1: common policy checks]

???

There's a pre-compilation test phase that runs code quality and static analysis.

---
# Case Study: My Pipelines

.fig100[ ]

.bottom.right[Stage 2: compile and package]

???

* Pipeline builds Docker images for x86 and ARM

---
# Case Study: My Pipelines

.fig100[ ]

.bottom.right[Stage 3: Testing (on target arch)]

???

Here's where our ARM CI worker gets used. All of those jobs run on cloud-hosted x86 servers, except for the testing that needs to run on actual ARM hardware. For that I have some Raspberry Pi systems which act as workers in the CI farm.

---
# Case Study: My Pipelines

.fig100[ ]

.bottom.right[Stage 4: Deploy to container registry]

???

* Side branches (issue and feature branches) push to dev registry
* Master branch pushes to main registry, tagged as staging or live
* Auto push to staging registry
* Manual push to production registry

---
# Case Study: My Pipelines

.fig100[ ]

???

You can build all this with as little as two machines, or hundreds if you need them.
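---
# Case Study: My Pipelines

One possible shape for the Stage 4 branch rules, as a sketch. It assumes the `make push ARCH=... ENVIRONMENT=...` convention used by this pipeline's staging job; the job names and the `dev` and `production` environment values are assumptions, not the real configuration.

.smallcode[
```yaml
# Sketch only: job names and the dev/production values are assumed.
push to dev registry:
  stage: deploy
  except:
    - master              # issue and feature branches only
  script:
    - make push ARCH=pi ENVIRONMENT=dev

push to production:
  stage: deploy
  environment:
    name: production
  only:
    - master
  when: manual            # a person has to trigger the production push
  script:
    - make push ARCH=pi ENVIRONMENT=production
```
]

???

`only` and `except` select branches, and `when: manual` leaves the job waiting in the pipeline until someone starts it, which matches the "auto to staging, manual to production" split.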
---
# Case Study: My Pipelines

.smallcode[
```yaml
# note the pi build job can be run on x86 because Go is awesome
build for pi:
  stage: build
  script:
    - make installdeps image contents ARCH=pi
  artifacts:
    paths:
      - GPIOpower
      - GPIOpower_docker_pi.tar.gz
      - GPIOpower_contents_pi.tar.gz

#
# Run tests on RasPi
#
test on pi:
  stage: test
  tags:
    - pi
  script:
    - make test

deploy to staging:
  stage: deploy
  environment:
    name: staging
  only:
    - master
  script:
    - make push ARCH=x86 ENVIRONMENT=staging
    - make push ARCH=pi ENVIRONMENT=staging
```
]

???

This is almost the entire configuration for these pipelines. I use worker tags to force certain jobs to run on ARM.

---
# Package separate lab and field artifacts

???

Even with the best of intentions, temporary developer backdoors stick around. So make a sandbox that's safe to put backdoors in.

---
# Defeat laziness by making it easier to do the right thing

???

You can't out-lazy programmers. Set up your system so that every CI build results in both a locked-down and a developer-friendly version. You don't ever want to hear "I'll just hack this into the production build and then take it out later".

---
.fig50[]

# Dashboards as "live tests"

## Containerise your BI stack and write dashboards alongside code

???

Okay, so you made it to release. Now there's ten thousand instances of your code out there, and they're up a pole, or buried under asphalt, or fired into space. So don't stop testing. When we run our tests in production we call them dashboards.

Bond villains are big on dashboards. You know the funny thing is, I set out to find a Bond movie image for this slide, and they all looked rubbish compared to reality. It seems that, like myself, a generation of nerds were inspired by science fiction to build their own supervillain future. That image up there is from Hootsuite's operations centre, and it only shows about a third of the room. It seems you need more screens to post a tweet than you do to conquer the world.
But who's looking at those screens? There are ten or more for every person in the room. We need machines to watch our machines.

---
# Dashboards learn "green" state and alert on red

## Obligatory reference to Machine Learning

.bottom.right[
Do you want to know **more**?<br>"Continuous Dashboarding" [christopher.biggs.id.au/talk](http://christopher.biggs.id.au/talk)]

???

* Simplest approach - red line gauges.
* Artificial stupidity - ignore normal, what's left?
* Recent ELK stack release (in June) has ML anomaly detection.

---
layout: true
template: callout

.crumb[
# Landscape
# Challenges
# Solutions
## Platforms
## Dev
## QA
## Deployment
]

---
template: inverse

# Deployment

???

All right, so you have some tested code that's been encapsulated in container images, and now you need a device on which to run it.

---
.fig50[ ]

.spacedown[
# Orchestrate: Never do anything by hand.
]

???

Do not fall into the trap of turning yourself into a robot. Some people say that as a programmer if you ever have to do something more than twice, you should automate it. Well, let me save you those two times. If you learn how to use orchestration systems to configure servers, you'll find that pretty soon it's quicker to use them even for things that you only have to do once. Also you were wrong about only ever needing to do it once.

---
# Build a provisioning workflow

## Customise a clean OS (via ethernet or emulation)

???

Here's something that I've seen over and over. A project starts with developers putting together a quick prototype by starting from a blank OS installation, installing some tools and editing the configuration. Then it's six months later and there's a new version of the OS and nobody remembers how to go from a clean OS to an app-ready platform. And that's how many embedded devices end up running eight-year-old kernels that are riddled with bugs.

---
# Robo-configure the target system from a provisioning system

## Then save a filesystem image

???

So, what's a better way?

* Remote admin of a target machine (SaltStack over SSH)
* Target machine in a VM, e.g. Vagrant
* Target machine in a container (Dockerfile)

---
# How do you create a provisioning system?

## Turtles all the way down!

.bottom.right[
Do you want to know **more**?<br>.blue[github.com/unixbigot/kevin]
]

???

You pull yourself up by your bootstraps. SaltStack - masterless (serverless) mode to create the first server.

---
# Case study - My orchestration scripts

.leftish[
1. Create a read only recovery partition
1. Install SaltStack orchestration minion<br>(now switch protocols)
1. Set timezone, locale, etc.
1. Change default passwords
1. Configure network
1. Provision message bus clients
1. Install language runtimes (nodejs, java etc.) if needed
1. Configure VPN client
1. Fetch initial application containers
]

???

The first part of my process is converting a vendor image to a product base image. All the little tweaks to do your localisations and preferences, get those out of the way. Do them once, but be able to redo or alter them at any moment.

So let's look through my configuration stack from the bottom up. Once again, I'm using SaltStack but the techniques are applicable to whichever tool you choose.

---
# Zoom level 4: System configuration

.smallcode[
```yaml
# Install the GPS daemon and a time service
gpsd:
  pkg.installed:
    - pkgs:
      - gpsd
      - chrony

# Point gpsd at the GPS device named in pillar data
gpsd-device-configuration:
  file.replace:
    - name: /etc/default/gpsd
    - pattern: ^DEVICES=.*
    - repl: DEVICES="{{pillar.gps.device}}"
    - append_if_not_found: True

# Start gpsd now and on every boot
gpsd-running:
  service.running:
    - name: gpsd
    - enable: True
```
]

???

This runs through a bunch of repetitive configuration that nobody wants to do by hand.
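---
# Zoom level 4: System configuration

The states in this deck read values such as `pillar.gps.device` and `pillar.fleetvalid.arch`, which have to be defined somewhere. A minimal sketch of a pillar file that could supply them follows; the file name and every value are placeholders, not the real configuration.

.smallcode[
```yaml
# pillar/device.sls -- hypothetical file name; all values are placeholders
gps:
  device: /dev/ttyAMA0    # looked up by the gpsd state as pillar.gps.device

fleetvalid:
  arch: pi                # pillar.fleetvalid.arch, part of the image name
  docker:
    tag: staging          # pillar.fleetvalid.docker.tag, the image tag to run
```
]

???

Keeping per-device values in pillar data means the same state files can apply unchanged to every unit; only the data differs between devices.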
---

# Zoom level 3: Device component

.smallcode[
```yaml
# fleetvalid/rfid.sls
{% set image = reg.path + '/roadcurtain/' + rfid + 'rfid-' + pillar.fleetvalid.arch + ':' + pillar.fleetvalid.docker.tag %}

# Make sure the tested image is present on the device
fleetvalid-rfid-image:
  docker_image.present:
    - name: {{image}}

# Run it as a container, linked to its sibling containers
fleetvalid-rfid:
  docker_container.running:
    - image: {{image}}
    - links:
      - fleetvalid-aggregator:aggregator
      - fleetvalid-rfidpower:power
    - binds:
      - {{rfid_device}}:{{rfid_device}}
    - environment:
      - RCAGGREGATOR: aggregator:9091
      - POWER_API: power:80
    - privileged: True
```
]

???

Our deployment process is going to consume the output of our CI pipeline. The CI takes source code and turns it into tested Docker images. This config here controls how those images are instantiated as containers.

---

# Zoom level 2: Device profile

.smallcode[
```yaml
# fleetvalid/inroad_sensor.sls
include:
  - fleetvalid.aggregator
  - fleetvalid.rfid
  - fleetvalid.radar
  - fleetvalid.vibration
  - fleetvalid.rfidpower
```
]

???

At the next level up, the orchestration system fetches all the tested Docker images for a particular target, and drops them onto a prepared operating system. So we've closed the circle, we have continuous deployment.

---

# Zoom level 1: Device roles

.smallcode[
```yaml
# top.sls
'G@roles:controller':
  - match: compound
  - salt.syndic
  - mqtt.relay
  - mqtt.client
  - net.hostapd_bridge
  - net.gpsd
  - fleetvalid.station-console

'G@roles:sensor':
  - match: compound
  - os.initramfs
  - os.hostname
  - net.aws.cli
  - docker
  - fleetvalid.docker_auth
  - fleetvalid.service-advertiser

'G@roles:inroad_sensor':
  - match: compound
  - fleetvalid.inroad_sensor
```
]

???

And at the very top we see the configuration that tells SaltStack what to put on particular machines, using role designations and other information.

Base profile for sensor, additional components for particular sensors.

Machines are PC or ARM; the majority of the system doesn't have to care.
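
The `G@roles:...` matchers in `top.sls` select machines by a `roles` grain. One common way to assign that grain, sketched here on the assumption that the device keeps static grains in `/etc/salt/grains`, is a short YAML file on the device itself. The role names are the ones used in `top.sls`; the choice of file is an assumption about the setup, not something the slides state.

```yaml
# /etc/salt/grains on an in-road sensor (assumed location for static grains)
roles:
  - sensor          # picks up the base sensor profile
  - inroad_sensor   # adds the in-road sensor components
```

A machine carrying both roles matches both the `sensor` and `inroad_sensor` stanzas, so it gets the base profile plus the extra components.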
The orchestration system uses the assigned role to load the role configuration, which fetches the tested Docker images and drops them onto the prepared operating system. So we've closed the circle, we have continuous deployment.

---

# Hey, that all sounds a bit like PaaS

## Yeah, it does.

???

At this point you might be thinking this sounds a lot like what platform-as-a-service offerings, like OpenShift or Elastic Beanstalk, do. Those systems provide a pre-prepared operating system which you don't have to care about, and you just drop your code into place.

Well, you'd be right. There are options for IoT where you get something very similar. I'm going to tell you about four examples, two big, two small.

---

# Amazon AWS Greengrass

## IoT PaaS built on AWS IoT + AWS Lambda

???

Amazon Greengrass is one way. If you're familiar with Amazon's Lambda, their functions-as-a-service offering, Greengrass is basically on-premises Lambda: you use the same mechanisms, but instead of deploying to an invisible container host in the cloud, you're deploying to an on-premises system that you nominate.

---

# Resin.io

## IoT PaaS with Linux and Docker

.bottom.right[
Do you want to know **more**?<br>[https://resin.io/](https://resin.io)<br>
"The Internet of Scary Things" [christopher.biggs.id.au/talk](http://christopher.biggs.id.au/talk)]

???

Another platform offering for IoT is resin.io, which provides a base Linux image which connects back to their cloud systems. You push your code to their git servers, and their system compiles it and pushes it down to one or more registered devices.

---

# Apache MyNewt

## Embedded component-based OS for wireless sensors

???

Here are a couple of platforms that target the smaller embedded systems, too small to run Linux.

Apache MyNewt is targeted at applications that may involve billions of devices.

Tools first. Security, logging. Portable. Monitoring and management.

Open source Bluetooth stack. BlueBorne.
---

# Mongoose-OS

## Multiplatform embedded OS with cloud integration and remote upgrade

.bottom.right[
Do you want to know **more**?<br>[http://mongoose-os.com/](http://mongoose-os.com)<br>
"IoT in two Minutes" [christopher.biggs.id.au/talk](http://christopher.biggs.id.au/talk)<br>
"Javascript Rules My Life (CampJS 2017)" [christopher.biggs.id.au/talk](http://christopher.biggs.id.au/talk)]

???

The final platform I want to shout out to is a project called Mongoose OS. It offers the same sort of facility without the central cloud repository: you push a zipfile to a device and it verifies and installs the contents.

---

# Automate PKI enrolment

## IoT Makes PKI Easy. .right[-er ]

???

So let's zoom in on that "verifies" part. How do you permit secure remote access to your devices? The obvious way is with X.509 certificates as used on the World Wide Web. Only the public key infrastructure for the World Wide Web is basically a global protection racket.

The hard part of public key cryptography is authenticating the remote party. That's what certification authorities are for on the web. The alternative is two secret agents exchanging briefcases in the park.

Well, it turns out that's exactly what we do.

---

# Your "secure key distribution channel" is a cardboard box

.leftish[
1. Build and sign a root certificate
1. Upload root cert to the SaltStack master
1. Create minion certificate
1. Install minion certificate on minion
1. Upload a copy to the master
1. All automatically
]

???

We need a tamper-proof channel to get a unique individual secret key from the factory out to our device. Only our device was made in the factory. So we put the key inside on the assembly line, then ship the device.

The way I achieve this in my project is again using SaltStack: during final assembly test we assign an identity to a device and provide it with its security certificate.
Now we have trustworthy secure communications with a remote device. That allows us to send digitally signed software updates that the device will trust, because they're signed with a certificate that it has carried around since birth.

---

# Containerised version control

## Use your docker registry the way it was intended

???

Now that we have a secure way to get updates out to devices, our next task is to keep track of what versions each device has, and which are available.

Well, it turns out our container system does this for us. Docker images have one or more tags, which are intended for version identification. The tag you might be familiar with is 'latest', which is the default tag that's used if you don't supply one.

Well, we can define a tag for staging, and demo and debug and whatever else we need, and permanent tags for each previous version. Now we know what version every system has, and we have a way to request a particular version.

---

# Use Salt "grains" to define which container to use

.right[(i.e. live, staging, dev or other)]

.left[
```yaml
fleetvalid-rfid-image:
  docker_image.present:
    - name: {{image}}
    - force: True

fleetvalid-rfid:
  docker_container.running:
    - image: {{image}}
    - links:
      - fleetvalid-aggregator:aggregator
      - fleetvalid-rfidpower:power
    - binds:
      - {{rfid_device}}:{{rfid_device}}
    - environment:
      - RCAGGREGATOR: aggregator:9091
      - POWER_API: power:80
```
]

???

We are *this* close to continuous delivery right now. The last thing we need is to control which devices get which versions.

Well, our orchestration system is also a configuration management system, which has a pair of key-value stores: one maintained at the server (Salt calls it pillar), and one on the devices (grains).

---
layout: true
template: callout

.crumb[
# Landscape
# Challenges
# Solutions
## Platforms
## Dev
## QA
## Deployment
## Maintenance
]

---
template: inverse

# Maintenance

???

OK, we made it. We're deployed. But we're not out of the woods yet.
I'm working on devices that are going to be literally set in concrete and expected to keep working for a decade. When they break down you'll need a jackhammer and some earmuffs.

---

# Self-care

## Flash memory longevity tweaks

???

So let's think about flash memory. Each sector can only sustain about 100k write cycles before it becomes an ex-sector. So you don't want to be indiscriminately writing to your disk.

Some things you should configure are turning off filesystem access timestamps, and logging to a local RAM disk prior to shipping any logs you want to keep to the cloud.

---

# Boot to ramdisk

## Sanity check, then proceed to target environment

???

Linux systems have had the ability to boot with an initial ramdisk for a long time. For example, when you're running a RAID system, you need somewhere to keep your RAID device drivers.

You can turn this feature on even in your teensy embedded system, so that if your filesystem goes bad due to too many writes you can repair it using the repair utilities kept on the RAM disk image.

---

# Recovery mode

## (ab)use DHCP

???

But what if your concrete-encased device goes into a crash loop? Well, I've prepared for this. My installations don't typically use DHCP, but the system does send out an address request packet at boot time, which is usually unanswered. If it does get an answer commanding it to go to recovery mode, it starts a login server and waits for input. But first it uses that conveniently shipped public key certificate to be certain it can trust the command.

---

# Liveness monitoring

## If a device goes silent, notify the site custodian

???

So that's some things a device can do to look after itself. What else do we have in our bag of tricks?

Well, our orchestration system includes a beacon module that transmits some basic performance data, so our management system can provide a report of any devices that have gone silent.

---

# Sickness monitoring

## Use an audible or visual attention signal (think smoke-alarms)

???
In the event that a device is still alive but has a component failure, we can be a little more helpful and have it call attention to itself. When every lightbulb in a building is self-aware, or every smoke alarm or every lamp post, a little bit of help working out just which one is on the fritz comes in very handy.

---

# While you were sleeping

## Intermittent connections

???

In the case where devices are not online all the time, how do we manage updates? Well, Amazon's IoT does a great job of this: right after a device comes online it receives an update of all the state changes that were initiated while it was offline.

But we can do the same thing with an orchestration system. In SaltStack there's a component called the reactor, which responds to defined conditions. So we can trigger actions to occur when a device connects, such as transmitting any pending updates.

---

# Kill or Cure

## Feature/component disable

???

Next I want to consider what happens if there's a problem we can't fix with software. Maybe the fire alarm is stuck on at 3am. You want to be able to shut down components that require physical repair, rather than have them be a nuisance or a danger. So if you can build feature switches into your software and hardware, you should do so.

---

# Kill or Cure

## The Cassini Solution

???

Finally, what if a device is out there and you're done supporting it? There's a space probe orbiting Saturn right now that has about 20 kilograms of plutonium on board. It's running out of fuel, which means it will eventually smack into a moon. Since we think there could be life on some of Saturn's moons, it wouldn't be neighbourly to give them all tentacle cancer, so in a few days it will use the last of its fuel to kamikaze dive into Saturn itself.

If you're building devices that could outlive their software support, I encourage you to think about this situation.
The recent problems with factories and businesses still using abandoned Windows XP systems show us that if you can't patch it, you should unplug it. One simple way to do this might be to revoke or stop updating their security certificates.

---
layout: true
template: callout

.crumb[
# Landscape
# Challenges
# Solutions
## Platforms
## Dev
## QA
## Deployment
## Maintenance
## Monitoring
]

---
template: inverse

# Monitoring and Measurement

## Ooops, not today

.bottom.right[
Do you want to know **more**?<br>
"IoT at Scale" [christopher.biggs.id.au/talk](http://christopher.biggs.id.au/talk)]

???

All right, we're coming to the end. The last thing I normally talk about is the data being emitted from your things, but there isn't time today. Since Sharon gave me permission, I'll leave you with a cross reference to a whole talk on IoT data management, and the offer to present it for you at your invitation.

---
layout: true
template: callout
class: bulletsh4

.crumb[
# Landscape
# Challenges
# Solutions
# Coda
## Summary
]

---

.fig40[ ]

# Summary

.left[
#### Lots of devices, too many to administer by hand
#### Swimming in a soup of malware and bad actors
#### Choose tools that support quality
#### Pipelines for automated build/test/stage
#### (Ab)use traditional cloud management tools for IoT Fleet
#### Message bus all the things
#### Big data now, play later
]

???

All right, let's summarise what I've talked about. We've looked at the threat landscape. We've worked through the product lifecycle from development and testing, to deployment, management and retirement.
---
layout: true
template: callout

.crumb[
# Landscape
# Challenges
# Solutions
# Coda
## Summary
## Resources
]

---

# Resources, Questions

.left[
#### My SaltStack rules for IoT
- [github.com/unixbigot/kevin](https://github.com/unixbigot/kevin/)

#### Related talks
- [http://christopher.biggs.id.au/#talks](http://christopher.biggs.id.au/#talks)

#### Me - Christopher Biggs
- Twitter: .blue[@unixbigot]
- Email: .blue[christopher@biggs.id.au]
- Slides, and getting my advice: http://christopher.biggs.id.au/
- Accelerando Consulting - IoT, DevOps, Big Data - https://accelerando.com.au/
]

???

Thanks for your time today. I'm happy to take questions in the few moments remaining, and I'm here all day if you want to have a longer chat.

Over to you.